What you need to know about China's AI ethics rules

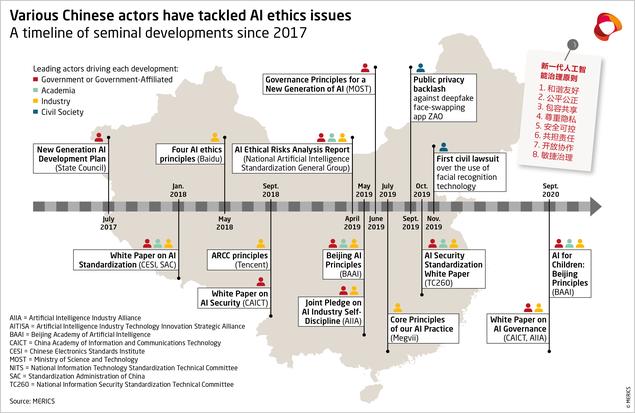

Late last year, China's Ministry of Science and Technology issued guidelines on artificial intelligence ethics. The rules stress user rights and data control while aligning with Beijing's goal of reining in big tech. China is now trailblazing the regulation of AI technologies, and the rest of the world needs to pay attention to what it's doing and why.

The European Union issued a preliminary draft of AI-related rules in April 2021, but we've seen nothing final. In the United States, the notion of ethical AI has gained some traction, but there are no overarching regulations or universally accepted best practices.

China's broad guidelines, which are already in effect, are part of the country's plan to become the global AI leader by 2030. They also address the long-term social transformations that AI will bring and aim to manage and guide those changes proactively.

The National New Generation Artificial Intelligence Governance Professional Committee is responsible for interpreting the regulations and will guide their implementation.

Here are the most important parts of the directives.

China's AI governance and regulations

Titled "New Generation Artificial Intelligence Ethics Specifications," the guidelines list six core principles to ensure "controllable and trustworthy" AI systems and, at the same time, illustrate the extent of the Chinese government's interest in creating a socialist-driven and ethically focused society.

Here are the key portions of the specs, which can be useful in understanding the future direction of China's AI.

General provisions

The aim is to integrate ethics and morals into the entire lifecycle of AI; promote fairness, justice, harmony, and safety; and avoid issues such as prejudice, discrimination, and privacy and information leaks. The specification applies to natural persons, legal persons, and other related institutions engaged in activities connected to AI management, research and development, supply, and use.

According to the specs, the various activities of AI "shall adhere to the following basic ethical norms":

These ethical rules should be followed in all AI-related activities, including management, research and development, supply, and use.

AI management standards

The regulation specifies these goals when managing AI-related projects:

Research and development, quality, and other issues

Under the rules, companies will integrate AI ethics into all aspects of their technology-related research and development. Companies are to "consciously" engage in self-censorship, strengthen self-management, and refrain from engaging in any AI-related R&D that violates ethics and morality.

Another goal is to improve the quality of data collection, storage, and processing, and to make algorithm design, implementation, and application more secure and transparent.

The guidelines also require companies to strengthen quality control by monitoring and evaluating AI products and systems. Related requirements call on companies to formulate emergency mechanisms and compensation plans or measures, to monitor AI systems promptly, and to process and respond to user feedback promptly.

Proactive feedback and usability improvement are central ideas. Companies must provide proactive feedback to relevant stakeholders and help solve problems such as security vulnerabilities and gaps in policy and regulation.

Why you should care

The Chinese policy's emphasis on keeping AI "under meaningful human control" will no doubt draw comparisons to Isaac Asimov's Three Laws of Robotics. The bigger question is whether China, the United States, and the European Union can find common ground on AI ethics.

Without question, the use of AI is growing. In my opinion, the United States still holds the lead, with China closing the gap and the EU falling behind. This growing use is pushing many toward the idea of an international, perhaps even global, governance framework for AI.

Compare the principles outlined by China with those of the European Commission's High-Level Expert Group on AI, and many aspects align. But the modus operandi is very different.

Let's consider the concept of privacy. The European approach to privacy, as illustrated by the General Data Protection Regulation (GDPR), protects an individual's data from commercial and state entities. In China, personal data is also protected, but, in alignment with the Confucian virtue of filial piety, only from commercial entities, not from the state. It is generally accepted by the Chinese people that the state has access to their data.

This issue alone may prevent a worldwide AI ethics framework from ever being fully developed. But it will be interesting to watch how the idea evolves.