Toward Ethical and Equitable AI in Higher Education

As the higher education sector grapples with the “new normal” of the post-pandemic era, the structural issues of the recent past not only remain problematic but have been exacerbated by COVID-related disruptions throughout the education pipeline. Navigating the complexity of higher education has always been challenging for students, particularly at underresourced institutions that lack the advising capacity to provide guidance and support. Areas such as transfer and financial aid are notorious black boxes, where students lacking financial resources and “college knowledge” are too often left on their own to make decisions that may prove costly and damaging down the line.

The educational disruptions that many students have faced during the pandemic will likely deepen this complexity by producing greater variation in individual students’ levels of preparation and academic histories, even as stressed institutions have fewer resources to provide advising and other critical student services. Taken together, these challenges will make it all the more difficult to address the equity gaps that the sector must collectively solve.

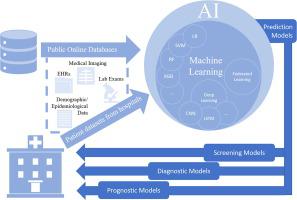

While not a panacea, recent advances in artificial intelligence methodologies such as machine learning can help to alleviate some of the complexity that students and higher education institutions face. However, researchers and policy makers should proceed with caution and healthy skepticism to ensure that these technologies are designed and implemented ethically and equitably. This is no easy task and will require sustained, rigorous research to complement the rapid technological advances in the field. While AI-assisted education technologies offer great promise, they also pose a significant risk of simply replicating the biases of the past. In the summary below, we offer a brief example, drawn from recent research findings, that illustrates the challenges and opportunities of equitable and ethical AI research.

Machine learning–based grade prediction has been among the first applications of AI to be adopted in higher education. It has most often been used in early-warning detection systems to flag students for intervention if they are predicted to be in danger of failing a course, and it is starting to see use as part of degree pathway advising efforts. But how fair are these models with respect to the underserved students these interventions are primarily designed to support? A quickly emerging research field within AI is endeavoring to address these types of questions, with education posing particularly nuanced challenges and trade-offs with respect to fairness and equity.

Generally, machine learning algorithms are most accurate in predicting that which they have seen the most of in the past. Consequently, in grade prediction, they will be more accurate for the students who earn the most common grade. When the most common grade is high, this perpetuates inequity: the students scoring lower are worst served by the very algorithms intended to help them. This was observed in a recently published study out of the University of California, Berkeley, evaluating predictions of millions of course grades at that large public university. Having the model give equal attention to all grades led to better results among underserved groups and more equal performance across groups, though at the expense of overall accuracy.
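One common way to have a model "give equal attention to all grades" is to weight each grade inversely to how often it occurs, and then to compare accuracy group by group rather than only overall. The sketch below illustrates that idea only; the function names and the toy data are hypothetical, and the study's actual method may differ.

```python
from collections import Counter

def balanced_class_weights(grades):
    """Weight each grade inversely to its frequency: n / (k * count).

    Rare grades get large weights, so every grade contributes
    equally to a weighted training loss (an illustrative assumption).
    """
    counts = Counter(grades)
    n, k = len(grades), len(counts)
    return {grade: n / (k * count) for grade, count in counts.items()}

def per_group_accuracy(y_true, y_pred, groups):
    """Return the fraction of correct predictions within each group."""
    correct, total = Counter(), Counter()
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}

# Toy data: "A" is the most common grade, "F" the rarest.
toy_grades = ["A"] * 6 + ["B"] * 3 + ["F"] * 1
weights = balanced_class_weights(toy_grades)
```

In this toy example the single "F" receives the largest weight, so a weighted loss would no longer be dominated by the most common grade. Comparing the output of `per_group_accuracy` across student groups is one simple way to check whether performance has become more equal.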

While addressing race and bias in a predictive model is important, doing so without care can exacerbate inequity. In the same study, adding race as a variable to the model without any other modification led to the most unequal, and thus least fair, performance across groups. Researchers found that the fairest result was achieved through a technique called adversarial learning, an approach that teaches the model not to recognize race by applying a penalty whenever race can be successfully predicted from a student’s input data (e.g., course history). Researchers also attempted to train separate models for each group to improve accuracy; however, a model trained on information from all students always predicted better for every group than one trained on that group’s data alone.
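The penalty at the heart of adversarial learning can be sketched as a combined objective: the grade predictor is rewarded for predicting grades well and penalized when a second model (the "adversary") can recover group membership from it. The code below is a minimal illustration under that assumption; the function names, the `lam` trade-off parameter, and the toy probabilities are hypothetical and are not taken from the study.

```python
import math

def cross_entropy(probs, labels):
    """Mean negative log-likelihood of the true labels.

    probs: one list of class probabilities per example.
    labels: the integer index of the true class for each example.
    """
    eps = 1e-12  # guards against log(0)
    losses = [-math.log(max(p[y], eps)) for p, y in zip(probs, labels)]
    return sum(losses) / len(losses)

def predictor_objective(grade_probs, grades, group_probs, groups, lam=1.0):
    """Quantity the grade predictor tries to minimize.

    The adversary's loss is subtracted, so the objective rises
    (a penalty) when the adversary predicts group membership well.
    """
    grade_loss = cross_entropy(grade_probs, grades)
    adversary_loss = cross_entropy(group_probs, groups)
    return grade_loss - lam * adversary_loss

# Toy data: identical grade predictions, two different adversaries.
grade_probs = [[0.8, 0.2], [0.3, 0.7]]
grades = [0, 1]
groups = [0, 1]
leaky = [[0.9, 0.1], [0.1, 0.9]]   # adversary recovers the group
hidden = [[0.5, 0.5], [0.5, 0.5]]  # adversary is at chance

leaky_objective = predictor_objective(grade_probs, grades, leaky, groups)
hidden_objective = predictor_objective(grade_probs, grades, hidden, groups)
```

With the same grade predictions, the objective is higher when the adversary recovers group membership than when it is at chance, which is what pushes the predictor toward representations that do not encode race. In a full training loop, which this sketch omits, the adversary and predictor would be updated in alternation.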

These findings underscore the challenges in designing AI-infused technologies that promote rather than undermine the student success objectives of an institution. Further work is needed to develop additional best practices to address bias effectively and to promote fairness in the myriad educational scenarios in which machine learning could otherwise contribute to the widening of equity gaps.

The State University of New York and UC Berkeley have launched a partnership to take on these challenges and advance ethical and equitable AI research broadly in higher education. The first project of the partnership will focus on the transfer space, where we will quantify disparities in educational pathways between institutions based on data infrastructure gaps, test a novel algorithmic approach to filling these gaps, and develop policy recommendations based on the results. While this project represents an incremental step, we look forward to advancing this work and welcome partnerships with individuals and organizations with similar interests and values.

To connect with us, reach out to Dan Knox and Zach Pardos.